Distributed Circuit Breakers at Hipmunk

How we implemented Circuit Breakers at Hipmunk to automatically deal with third party outages.

by Danver Braganza on 2021-01-17

We live in an ever-evolving universe where the only constant is change. When I worked at Hipmunk, the engineering team there was very sensitive to this fact of life. As a meta-search site for flights, hotels, cars and packages, the successful functioning of our site relied upon the success rates of our API calls to our partners and data providers.

Hipmunk would also flesh out these raw results with other rich data about each product, drawn from further third-party services. We might call APIs to geocode locations, fetch weather information, and look up extra amenities, ratings and images.

Hipmunk was not unique in the way we put together external services to build a new type of value for the user. Today’s trend towards increasing specialization and interconnectedness of services means that so many other engineering teams find themselves in the same boat, dependent on the health of third-party partners.

This reliance on external services exposes us to the risk of any one of them failing or timing out. Most applications experience a third-party failure as a burst of warnings in the logs. At worst, such an outage can disrupt service for our users and page an engineer, who must wake up and intervene manually to remedy it.

Calls to external dependencies can be coded to handle their errors and return a safe default. However, coding around dependency failures requires a large amount of boilerplate. Furthermore, if one of your providers goes down at 5 a.m., resulting in a flood of warnings in the logs, the engineer on call will still need to be paged. A flood of such warnings cannot be ignored, because amidst this noise you could miss something more critical.

At Hipmunk, the standard playbook for an on-duty engineer responding to such a page was to log in to our control panel and disable the offending service. In most cases, issues with the upstream provider resolved themselves after a few hours, at which point we would verify the service’s health and re-enable it.

Although this process took only a few clicks within an internal tool at Hipmunk, it was still a manual chore that could be frustrating for the person on call. Read on to find out how Circuit Breakers helped us automate these steps, freeing up valuable developer time and leading to page-free sleep.

Introduction to Circuit Breakers

What is a circuit breaker? It’s a guard on all calls to a distinct service. This guard monitors invocations of the service and tracks their successes and failures. Logically, the guard’s state can be modeled as a state machine.

Now, the conventional literature on Circuit Breakers is awash with terms like “open”, “closed” and “half-open”, which are inspired by the metaphor of an actual solenoid circuit breaker that trips to open a circuit.

At Hipmunk, we found this terminology to be more confusing than helpful in communicating and reasoning about the state of a circuit. Calling a circuit “open” if it has failed and “closed” if it is working makes sense if you really buy into the circuit metaphor. It’s equally possible for developers unfamiliar with the literature to subconsciously carry a valve metaphor instead, and expect exactly the reverse to be true.

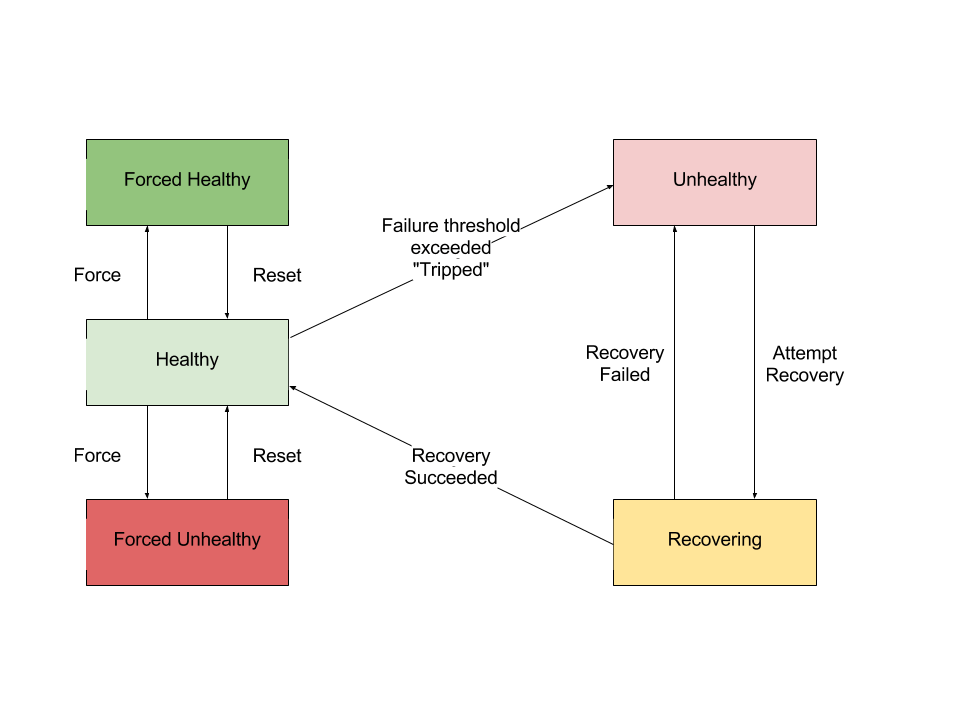

So, we came up with the following terminology for the states of the state machine.

Healthy: This is the “default” state for a Circuit Breaker. A Healthy circuit represents a service that is responding successfully to a sufficiently high proportion of requests. We’re confident in sending that service 100% of our request load.

Unhealthy: The mirror opposite of Healthy. An Unhealthy circuit has had too many failures recently, and all remote calls to this service are disabled. Any code that attempts to call this service instantly receives a fallback value.

Forced Healthy and Forced Unhealthy: These are two auxiliary states that we added to give us manual, fine-grained control over services. Maintainers of the site can set these states when they want to override the normal behaviour of the monitor. The intent is not to use them as feature flags for services, but to adapt more accurately to temporary conditions.

Recovering: When a circuit is in the recovering state, it means that it has spent enough time in the unhealthy state, and now we want the circuit to attempt to self-heal. To fully understand how recovery works, we need to understand the transitions between the various states.

The easiest transitions to understand are between healthy and the forced states, since they are always prompted by the input of an engineer. An engineer can force a circuit from any state to forced-healthy or forced-unhealthy (the transition lines from unhealthy and recovering to the forced states have been left out of the diagram for simplicity). A forced state can always be reset to healthy.
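One way to encode the five states and the manual transitions is sketched below. The names and string values are our own, not Hipmunk's, and RUNS_REMOTE reflects our reading of the text: healthy, forced-healthy and recovering circuits let calls reach the service, while the other two go straight to the fallback.

```python
from enum import Enum


class CircuitState(Enum):
    """The five states described above; the value strings are our own choice."""
    HEALTHY = "healthy"
    UNHEALTHY = "unhealthy"
    RECOVERING = "recovering"
    FORCED_HEALTHY = "forced_healthy"
    FORCED_UNHEALTHY = "forced_unhealthy"


FORCED = {CircuitState.FORCED_HEALTHY, CircuitState.FORCED_UNHEALTHY}

# States in which a guarded call actually reaches the remote service.
# Assumption: RECOVERING lets live traffic through so it can be judged.
RUNS_REMOTE = {
    CircuitState.HEALTHY,
    CircuitState.FORCED_HEALTHY,
    CircuitState.RECOVERING,
}


def manual_transition_allowed(src: CircuitState, dst: CircuitState) -> bool:
    """Transitions an engineer may make by hand."""
    if dst in FORCED:
        return True  # any state may be forced either way
    # A forced circuit can always be reset to HEALTHY.
    return src in FORCED and dst is CircuitState.HEALTHY
```

All other transitions are automatic and belong to the processor, not to a human.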

Next comes the transition from healthy to unhealthy. When the ratio of failed calls to successful ones exceeds a critical threshold, the decision is made to transition the service to the ‘unhealthy’ state.
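The trip rule can be sketched as a small pure function. The threshold and minimum call volume below are hypothetical placeholders, since Hipmunk's values are not given; comparing failures against trip_ratio * successes expresses the failure-to-success ratio without ever dividing by zero.

```python
# Hypothetical defaults, not Hipmunk's actual values.
TRIP_RATIO = 0.5   # trip when failures exceed half the number of successes
MIN_CALLS = 20     # ignore windows too small to be meaningful


def should_trip(successes: int, failures: int,
                trip_ratio: float = TRIP_RATIO,
                min_calls: int = MIN_CALLS) -> bool:
    """Decide whether a HEALTHY circuit should become UNHEALTHY.

    Equivalent to failures / successes > trip_ratio, but written as a
    multiplication so a window with zero successes needs no special case.
    """
    if successes + failures < min_calls:
        return False
    return failures > trip_ratio * successes
```

The minimum-volume guard is our addition: without it, a single failure in a quiet window would trip the circuit.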

Once enough requests have been skipped, or enough time has passed, in the ‘unhealthy’ state, a circuit automatically transitions into the recovering state. Here, we once again route live traffic through this circuit, but only for a small number of requests.
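A sketch of the check that moves an unhealthy circuit into recovery, assuming the processor knows how many calls were skipped and how long the circuit has been unhealthy. Either condition is sufficient; the skip threshold is a hypothetical value.

```python
# Hypothetical threshold; the real value is not published.
SKIPPED_CALLS_BEFORE_RECOVERY = 500


def ready_to_recover(skipped_calls: int, seconds_unhealthy: float,
                     wait_seconds: float,
                     skip_threshold: int = SKIPPED_CALLS_BEFORE_RECOVERY) -> bool:
    """Should an UNHEALTHY circuit move to RECOVERING?

    True once enough calls have been short-circuited to the fallback,
    or once the circuit has sat in UNHEALTHY for at least wait_seconds.
    """
    return skipped_calls >= skip_threshold or seconds_unhealthy >= wait_seconds
```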

The success ratio of these live requests is used to judge whether the service’s downtime has been resolved. The bar for this judgement is higher than the one we use to take a service from healthy to unhealthy. This keeps most services from “flapping”, cycling from healthy to unhealthy to recovering to healthy because of random variation in their results. If the service clears this higher bar, the circuit transitions to healthy again, and the incident has ended.
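The stricter recovery test might look like the following. The sample size and required success rate are hypothetical; what matters is that the recovery bar sits well above the level that trips a healthy circuit, which is the hysteresis that prevents flapping.

```python
from typing import Optional

# Hypothetical values, chosen only to illustrate a higher bar than tripping.
PROBE_SAMPLE = 20
REQUIRED_SUCCESS_RATE = 0.95


def judge_recovery(successes: int, failures: int,
                   sample: int = PROBE_SAMPLE,
                   required: float = REQUIRED_SUCCESS_RATE) -> Optional[bool]:
    """Verdict for a RECOVERING circuit.

    Returns None while fewer than `sample` probe results are in,
    True if the circuit should go back to HEALTHY, and
    False if it should return to UNHEALTHY.
    """
    total = successes + failures
    if total < sample:
        return None
    return successes / total >= required
```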

Conversely, if the service is still having difficulties, we send the circuit back to the Unhealthy state. We use a backoff algorithm to ensure that we wait longer than we did the previous time before checking the service again.
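One common way to realize “wait longer each time” is exponential backoff. In this sketch (hypothetical constants) the wait doubles after each failed recovery; the cap is our own addition so that a long outage is still re-checked periodically.

```python
# Hypothetical constants; the article only says each wait exceeds the last.
BASE_WAIT_SECONDS = 60
MAX_WAIT_SECONDS = 60 * 60


def unhealthy_wait(failed_recoveries: int,
                   base: float = BASE_WAIT_SECONDS,
                   cap: float = MAX_WAIT_SECONDS) -> float:
    """Seconds to stay UNHEALTHY before the next recovery attempt.

    The wait doubles after every failed recovery, up to `cap`.
    """
    return min(cap, base * 2 ** failed_recoveries)
```

The counter of failed recoveries would be reset once a circuit makes it back to healthy.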

Architecture of the Circuit Breaker System

When we architected Circuit Breakers at Hipmunk, we built them to fit in with the rest of the backend services, reusing many of the components already present in our infrastructure. This was a major contributor to the success of the project, and if you are inspired to implement circuit breakers in your own system, I recommend adapting them to your own environment in the same way.

Hipmunk’s backend servers were organized according to a differentiated monolith pattern. The same Python codebase was deployed on all our app servers, but the types of requests each server handled were segmented at the load balancer. This gave us the simplicity of development and deployment that a monolith brings, while still allowing us to tune the number of servers and their hardware to the workloads we expected them to serve.

All of Hipmunk’s servers used a distributed, event-driven messaging system to publish server events. These included info, warning and error logs, but also other, more semantic events. Any server could publish events into this system, and any other server could subscribe to them in (near) real time.

Hipmunk’s application servers and other services also had access to Redis for short-term storage. We used Redis heavily to cache database queries, expensive computations, and to debounce third-party queries.

Finally, we had Apache ZooKeeper as a distributed store of configuration for the various services within Hipmunk. We used ZooKeeper as the source of truth for configuration that we wanted to change at runtime without a deploy, such as feature flags.

These are the main pieces of infrastructure that played a part in the implementation of distributed Circuit Breakers.

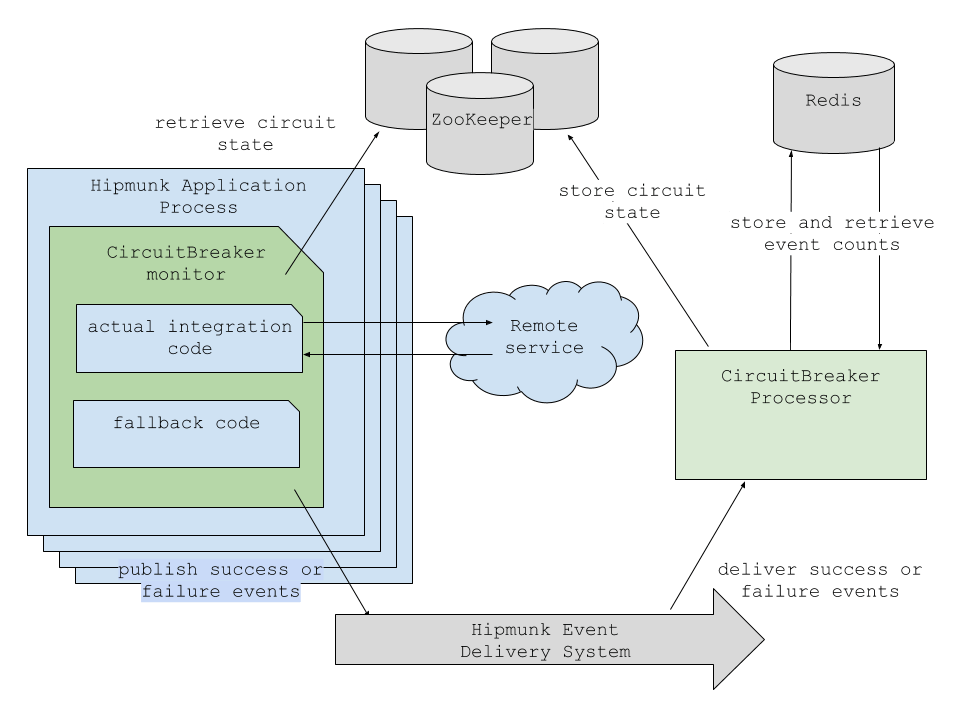

You can conceptually decompose the circuit breaker system into two components–a monitor and a processor.

The monitor was made available to all the other code running in the system as a software library, and could run on any application server. Whenever a call was made to a service protected by a circuit breaker, the monitor retrieved the current state for that service’s key from ZooKeeper. If ZooKeeper said that the circuit’s state was healthy, the monitor ran the code.

One requirement we set ourselves was that if ZooKeeper was unavailable (or if for some other reason the circuit breaker code was unable to complete its book-keeping), the monitor would default to assuming the circuit was healthy. It is far safer to err on the side of conservatism and run all the services all the time than to accidentally disable all our services because some component is temporarily unreachable.
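The fail-open rule is the part of the monitor most worth getting right. A minimal sketch, assuming a hypothetical fetch(key) callable that wraps the ZooKeeper read and returns the stored bytes, treats every error, missing value or unrecognized state as healthy.

```python
from typing import Callable, Optional

KNOWN_STATES = {"healthy", "unhealthy", "recovering",
                "forced_healthy", "forced_unhealthy"}


def read_state(fetch: Callable[[str], Optional[bytes]], key: str) -> str:
    """Return the circuit state for `key`, failing open to "healthy".

    `fetch` is a hypothetical stand-in for a ZooKeeper read.
    """
    try:
        state = fetch(key).decode("utf-8")
    except Exception:
        # ZooKeeper unreachable, node missing (None), undecodable bytes...
        return "healthy"
    # An unexpected value is treated the same way as an unreachable store.
    return state if state in KNOWN_STATES else "healthy"
```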

The monitor then called the actual code that interacts with the remote service. The code had a couple of ways to signal to the monitor whether it had succeeded. The simplest was to raise an exception, which the monitor would detect and momentarily intercept. Whenever it detected a failure, the monitor fired an event signifying the failure and then re-raised the exception. If no error was detected, the monitor sent an indicator of success to the event stream instead.

If the monitor found that the state within ZooKeeper had changed to unhealthy, it skipped the inner code and called the fallback instead. It still logged an event recording that the function was called, so that the processor knew the call had been made. This helped the processor determine when a sufficient number of requests had been skipped, so that it could move the circuit back into recovery.
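The monitor’s control flow can be sketched as a decorator class. This is not Hipmunk’s implementation: to keep it testable, the ZooKeeper read and the event publisher are injected as hypothetical read_state and publish callables, and a "skipped" outcome is emitted whenever the fallback runs. The fallback registration mirrors the @my_func.fallback usage shown in the API section.

```python
import functools
from typing import Any, Callable, Optional

# Assumption: these states let calls reach the real service.
RUNS_REMOTE = {"healthy", "forced_healthy", "recovering"}


class CircuitBreaker:
    """Sketch of the monitor: one ZooKeeper read, one event per call."""

    def __init__(self, key: str,
                 read_state: Callable[[str], str],
                 publish: Callable[[dict], None]) -> None:
        self.key = key
        self._read_state = read_state   # hypothetical ZooKeeper hook
        self._publish = publish         # hypothetical event-system hook

    def _state(self) -> str:
        try:
            return self._read_state(self.key)
        except Exception:
            return "healthy"  # fail open if book-keeping is impossible

    def _emit(self, outcome: str) -> None:
        # Book-keeping must never break the caller, so swallow errors here.
        try:
            self._publish({"key": self.key, "outcome": outcome})
        except Exception:
            pass

    def __call__(self, func: Callable[..., Any]) -> Callable[..., Any]:
        fallback: Optional[Callable[..., Any]] = None

        @functools.wraps(func)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            if self._state() not in RUNS_REMOTE:
                self._emit("skipped")  # lets the processor count skipped calls
                if fallback is None:
                    raise RuntimeError(f"circuit {self.key!r} is unhealthy")
                return fallback(*args, **kwargs)
            try:
                result = func(*args, **kwargs)
            except Exception:
                self._emit("failure")
                raise  # the caller still sees the original exception
            self._emit("success")
            return result

        def register_fallback(fb: Callable[..., Any]) -> Callable[..., Any]:
            nonlocal fallback
            fallback = fb
            return fb

        wrapper.fallback = register_fallback  # type: ignore[attr-defined]
        return wrapper
```

Because wrapper is a plain function, it also works on methods: self is simply passed through to both the guarded code and the fallback.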

In this way, we maintained the simplicity of the monitor code. Its only two operations were to read from ZooKeeper, and to write to our distributed event system. This is highly appropriate for code that might be called all over our servers in a number of different contexts, some performance-sensitive.

The state of the different circuits was managed by the circuit breaker processor, which ran in a process subscribed to the success and failure events logged by all the monitors. The processor kept, in Redis, a running count of the success and failure events within the most recent window, as well as a timestamp of the last time each circuit changed state.

All of the logic around state transitions can thus be encapsulated within the processor. It is the processor’s job to react to events by consulting Redis, updating the counts, and then updating ZooKeeper if a state transition is required. We also set up our processor to send an email out to our alerts mailing list whenever a circuit breaker tripped or recovered.
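Putting the transition rules together, a single-process sketch of the processor might look like the following. Plain dictionaries stand in for Redis (windowed counters) and ZooKeeper (circuit state), notify stands in for the alert email, and every tuning constant is hypothetical. Time-based recovery is checked lazily, whenever an event for that circuit arrives.

```python
import time
from collections import Counter
from typing import Callable, Dict, Optional

# Hypothetical tuning values; Hipmunk's are not published.
WINDOW_SECONDS = 60
MIN_CALLS = 20
TRIP_RATIO = 0.5            # trip when failures > TRIP_RATIO * successes
SKIPS_BEFORE_RECOVERY = 500
BASE_WAIT = 60.0            # doubled after every failed recovery
PROBE_SAMPLE = 20
REQUIRED_SUCCESS = 0.95


class Processor:
    """Sketch of the processor; dicts stand in for Redis and ZooKeeper."""

    def __init__(self, notify: Callable[[str, str, str], None]) -> None:
        self.states: Dict[str, str] = {}          # stand-in for ZooKeeper
        self.counts: Dict[str, Counter] = {}      # stand-in for Redis counters
        self.window_start: Dict[str, float] = {}
        self.changed_at: Dict[str, float] = {}
        self.failed_recoveries: Dict[str, int] = {}
        self.notify = notify                      # e.g. email the alerts list

    def _transition(self, key: str, new: str, now: float) -> None:
        old = self.states.get(key, "healthy")
        self.states[key] = new
        self.changed_at[key] = now
        self.counts[key] = Counter()              # fresh counts per state
        self.window_start[key] = now
        self.notify(key, old, new)

    def on_event(self, key: str, outcome: str,
                 now: Optional[float] = None) -> None:
        now = time.time() if now is None else now
        state = self.states.get(key, "healthy")
        if state.startswith("forced_"):
            return  # engineers own forced circuits; leave them alone
        counts = self.counts.setdefault(key, Counter())
        start = self.window_start.setdefault(key, now)
        if state == "healthy" and now - start > WINDOW_SECONDS:
            counts.clear()                        # start a fresh window
            self.window_start[key] = now
        counts[outcome] += 1
        ok, bad = counts["success"], counts["failure"]

        if state == "healthy":
            if ok + bad >= MIN_CALLS and bad > TRIP_RATIO * ok:
                self._transition(key, "unhealthy", now)
        elif state == "unhealthy":
            attempts = self.failed_recoveries.get(key, 0)
            waited = now - self.changed_at.get(key, now)
            if (counts["skipped"] >= SKIPS_BEFORE_RECOVERY
                    or waited >= BASE_WAIT * 2 ** attempts):
                self._transition(key, "recovering", now)
        elif state == "recovering" and ok + bad >= PROBE_SAMPLE:
            if ok >= REQUIRED_SUCCESS * (ok + bad):
                self.failed_recoveries[key] = 0
                self._transition(key, "healthy", now)
            else:
                self.failed_recoveries[key] = self.failed_recoveries.get(key, 0) + 1
                self._transition(key, "unhealthy", now)
```

A real deployment would also need the Redis updates to be atomic, since several events for the same key can arrive close together.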

Of course, having just one circuit breaker processor made for a single point of failure. We got around this by running a backup processor configured to take over if the primary processor ever went down. We also maintained a pager alert that would trigger if no circuit breaker events were processed within a set amount of time.
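How the backup processor took over is not specified. One common approach, sketched here with an in-memory stand-in for a shared lock with an expiry, is a lease that the active processor keeps renewing; the pager condition is then a simple silence check. Both the Lease class and the thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """In-memory stand-in for a shared lock with an expiry."""
    ttl: float
    holder: Optional[str] = None
    expires_at: float = 0.0

    def try_acquire(self, owner: str, now: float) -> bool:
        """Take or renew the lease; True means `owner` should process events."""
        if self.holder in (None, owner) or now >= self.expires_at:
            self.holder = owner
            self.expires_at = now + self.ttl
            return True
        return False


def should_page(last_processed_at: float, now: float, max_silence: float) -> bool:
    """Page a human when no events have been processed for too long."""
    return now - last_processed_at > max_silence
```

The primary renews the lease well within its ttl; if it dies, the backup’s next attempt after expiry succeeds and it starts processing.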

The last part of this system was the Circuit Breaker admin panel or dashboard, which engineers within Hipmunk would use to view the state of all circuit breakers and make adjustments in real time.

The API

We wanted to keep the API as simple as possible to encourage the adoption of circuit breakers across the engineering organization.

A user of the monitor instantiates their Circuit Breaker with a unique string key that identifies the service. We had a convention that these keys are dotted and follow the structure ‘company.product.service’. For example:

```python
# This circuit breaker monitors our own
# Natural Language Processing for hotels service.
circuit_breaker = CircuitBreaker('hipmunk.nlp.hotels')
```
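If you adopt a similar naming convention, it is cheap to enforce it when a breaker is created. A sketch follows; the allowed character set is our assumption, since only the three-part dotted shape is specified.

```python
import re

# Three dot-separated segments: company.product.service.
# Lowercase letters, digits and underscores are an assumption.
KEY_PATTERN = re.compile(r"[a-z0-9_]+\.[a-z0-9_]+\.[a-z0-9_]+")


def validate_key(key: str) -> str:
    """Return `key` unchanged, or raise ValueError if it breaks the convention."""
    if not KEY_PATTERN.fullmatch(key):
        raise ValueError(f"circuit key {key!r} is not 'company.product.service'")
    return key
```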

While other circuit breaker libraries might allow for a myriad of bewildering configuration options (trip on number of concurrent failures, trip on ratio of failures, time to recovery, history window size and so forth), we defined a very small set of customization options—and carefully picked defaults for the other options so that, to date, no user has asked to change them.

The circuit breaker object could be used as a decorator for your function:

```python
from datetime import timedelta

@circuit_breaker
def my_func(self):
    # The following call might raise an exception if it fails.
    response = self.make_remote_service_call(timeout=timedelta(seconds=2))
    if not response.json['success']:
        raise Exception('Any exception raised here will log a failure.')
    return response.json
```

When this circuit breaker has tripped, you may want to run a piece of code as a fallback—to return a sane default. This is precisely how easy it is to set that up:

```python
import logging

log = logging.getLogger(__name__)

@my_func.fallback
def my_func_fallback(self):
    log.debug(
        'The circuit has tripped, and so we are returning something safe.')
    return {'success': False}
```

And that, for the most part, is all there is to it. We also defined a couple of additional methods, circuitbreaker.async and circuitbreaker.async_fallback, since our codebase made extensive use of Tornado’s coroutines, but that is almost an implementation footnote at this point.
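Note that since Python 3.7, async is a reserved keyword, so an attribute literally named circuitbreaker.async would be a syntax error today; a modern port would need another name. Under that constraint, here is a sketch of an asyncio (not Tornado) variant that mirrors the synchronous monitor. The factory name async_circuit_breaker and its read_state and publish hooks are hypothetical.

```python
import asyncio
import functools
from typing import Any, Awaitable, Callable, Optional

RUNS_REMOTE = {"healthy", "forced_healthy", "recovering"}


def async_circuit_breaker(key: str,
                          read_state: Callable[[str], str],
                          publish: Callable[[dict], None]):
    """Hypothetical decorator factory guarding coroutine functions."""

    def decorate(func: Callable[..., Awaitable[Any]]):
        fallback: Optional[Callable[..., Awaitable[Any]]] = None

        def state() -> str:
            try:
                return read_state(key)
            except Exception:
                return "healthy"  # fail open, as the synchronous monitor does

        @functools.wraps(func)
        async def wrapper(*args: Any, **kwargs: Any) -> Any:
            if state() not in RUNS_REMOTE:
                publish({"key": key, "outcome": "skipped"})
                if fallback is None:
                    raise RuntimeError(f"circuit {key!r} is unhealthy")
                return await fallback(*args, **kwargs)
            try:
                result = await func(*args, **kwargs)
            except Exception:
                # asyncio.CancelledError derives from BaseException (3.8+),
                # so a cancelled call is not counted as a service failure.
                publish({"key": key, "outcome": "failure"})
                raise
            publish({"key": key, "outcome": "success"})
            return result

        def async_fallback(fb: Callable[..., Awaitable[Any]]):
            nonlocal fallback
            fallback = fb
            return fb

        wrapper.async_fallback = async_fallback  # type: ignore[attr-defined]
        return wrapper

    return decorate


if __name__ == "__main__":
    demo_events = []
    guard = async_circuit_breaker("hipmunk.nlp.hotels",
                                  lambda k: "unhealthy", demo_events.append)

    @guard
    async def geocode(place: str) -> str:
        await asyncio.sleep(0)  # stands in for a real network call
        return f"coordinates for {place}"

    @geocode.async_fallback
    async def geocode_fallback(place: str) -> str:
        return "unknown"

    print(asyncio.run(geocode("Boston")), demo_events)
```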

Takeaways

Of course, I am sad to remember that Hipmunk is no more. However, our engineering investment in Circuit Breakers paid for itself in a very short time. The rollout of circuit breakers meant that we no longer had to respond to intermittent errors from our partners, and that we were able to keep bursts of errors out of our logs. As the amount of unplanned work our on-call engineers had to deal with went down, the productivity and morale of the team improved greatly.

The key factor that allowed us to rapidly build this tool was to reuse the technologies we were already using within Hipmunk. To facilitate easy adoption, we focused on making the interface for developers using this technology as simple as possible, even at the expense of customizability.

Finally, the alert emails and the control panel made the tool’s internal state visible, which built other developers’ confidence in, and reliance on, the tool.

I would like to credit Kevin Hornschemeier and Zak Lee for their work and input into the Circuit Breakers project.
