Improving My Toothbrush, an Alexa Skill, with Go and the help of AI tools

How I leveraged Go, GPT-4 and DALL-E to improve a simple toothbrush timer Alexa Skill

by Danver Braganza on 2023-03-19

Introduction

This was another rainy weekend in San Francisco, so I used the time to release an update to My Toothbrush, an Alexa Skill that guides users through a 2-minute tooth-brushing session. I’d originally coded the basics of this skill at an Amazon Developer Workshop, and I’ve added to it only occasionally over the years. In this article, I will describe what the skill does, how I built it, and how I improved it this weekend using ChatGPT-4 and DALL-E.

What the app does

The skill itself is fairly simple. You can enable it yourself, if you have access to an Alexa device right now. You just have to say,

Alexa, start my toothbrush

and Alexa will enable and launch the skill. Once you are ready, she will guide you through a 2-minute tooth-brushing session, calling out when it’s time to move to different areas of your mouth. She will also break up the monotony by announcing facts about teeth, such as:

Did you know that the blob of toothpaste you put on the bristles of the brush is called a nurdle?

And that’s about it. It’s a pretty simple skill that does what it sets out to do. I really enjoyed building it, and it has nearly 200 monthly active users.

How I originally built it

In the original workshop where I built the skeleton of My Toothbrush, we were supposed to be building a generic “facts”-style skill called Space Facts. Whenever a user would ask the skill for a space fact, it would randomly index into a list of about 20 facts about space, and respond with a single fact that Alexa would then read aloud.

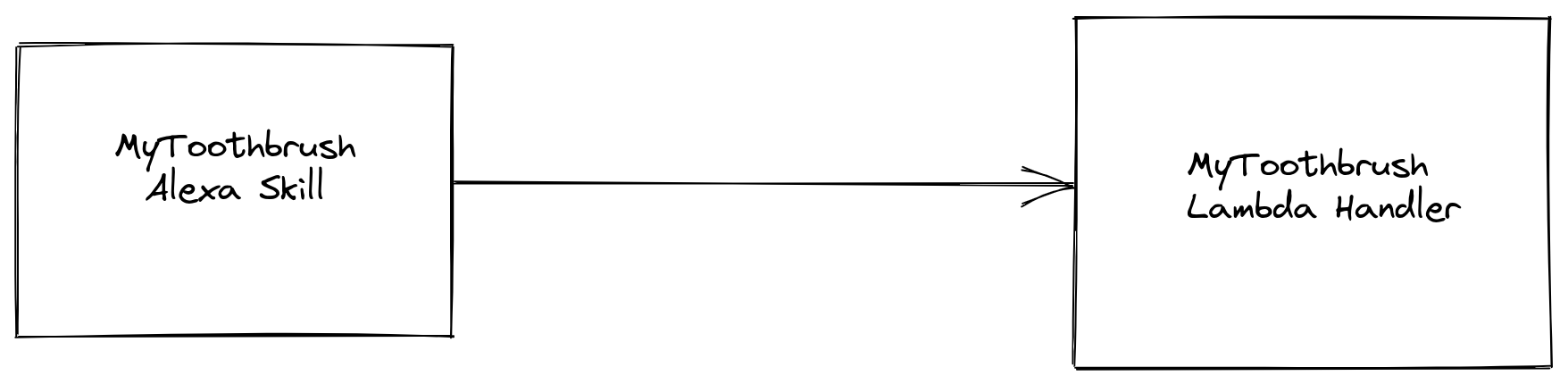

The architecture of the skill was fairly basic, but leveraged several of Amazon’s technologies: using the Alexa Developer Console to configure the application, and then using AWS Lambda to serve as the backend.

We configured the Skill part of the system first, in the Alexa Developer Console. This step involved defining a simple speech model using Intents and Slots. Intents are the actions you want your application to handle, while Slots are the parameters to those actions.
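To make the Intent and Slot idea concrete, here is a minimal Go sketch that decodes the intent portion of a request and reads one slot from it. The JSON shape follows the Alexa request format as I understand it (an intent `name` plus a map of `slots`), while the intent name `GetFactIntent`, the slot name `topic`, and the helper functions are made up for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Slot is one parameter captured from the user's utterance.
type Slot struct {
	Name  string `json:"name"`
	Value string `json:"value"`
}

// Intent is the action the user asked for, plus any slots that came with it.
type Intent struct {
	Name  string          `json:"name"`
	Slots map[string]Slot `json:"slots"`
}

// parseIntent decodes the intent portion of a request.
func parseIntent(data []byte) (Intent, error) {
	var in Intent
	err := json.Unmarshal(data, &in)
	return in, err
}

// slotValue returns the value of the named slot, or fallback when the slot
// is missing or empty.
func slotValue(in Intent, name, fallback string) string {
	if s, ok := in.Slots[name]; ok && s.Value != "" {
		return s.Value
	}
	return fallback
}

func main() {
	// Hypothetical payload: an intent with a single "topic" slot.
	raw := []byte(`{"name":"GetFactIntent","slots":{"topic":{"name":"topic","value":"enamel"}}}`)
	in, err := parseIntent(raw)
	if err != nil {
		fmt.Println("decode error:", err)
		return
	}
	fmt.Printf("intent=%s topic=%s\n", in.Name, slotValue(in, "topic", "teeth"))
}
```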

The next step was to connect this Skill with a backend, and of course Amazon recommended that we use Lambda for the job. We used a pre-built Lambda template that was already set up for Space Facts, and all we needed to do was edit the JavaScript code directly in the Lambda Code Editor to swap in our own facts.

I think this looks like the original tutorial that we followed. It’s out of date now already, but that page has a link to the new way to get started.

I was surprised to learn that the most labour-intensive step of building this app turned out to be researching a set of tooth facts to put into it. I spent a lot of time reading through Wikipedia pages on Human teeth, Dental Plaque and other such things, trying to come up with a good list. I mention this because it will become relevant later.

How I complicated the implementation of my own skill

The initial version of my app was written as a single file of JavaScript code, which I directly edited in a text box in the Lambda configuration page. Of course, I wanted to have the ability to store the code on my local computer and edit it with my favourite editor. I also personally avoid writing JavaScript unless I’m being paid for it, and so I wanted to write the body of the Skill in Go.

However, at that time there wasn’t much support for Alexa skill development in Go. Although Lambda support for Go was launched in January 2018, I wasn’t able to find a comparable Go SDK that would enable me to develop an Alexa Skill.

So I devised a cunning plan to write my skill in Go using GopherJS bindings to the Alexa SDK. Then I would use GopherJS to compile my skill, and host it on the same NodeJS runtime in Lambda. So that was how I built it.

I ended up being pretty happy with what I’d done. I’d check in on my little project from time to time, and see how many people were using it. I noticed that there was a persistent error rate, and that the P-90 latency was around 6000 ms, but I thought this was good for a Lambda.

How I improved it

Fast forward to this weekend, when I realized that it was high time for an update. I’m not a consistent dog-fooder of my own toothbrush skill, but I do use it occasionally, and I have to say, hearing the same facts over and over again was getting tedious.

So, I went looking for my Go code and GopherJS pipeline. Imagine my consternation when I realized I couldn’t find it anywhere. I searched multiple laptops, hard-drive partitions and removable USB disks. Eventually, I was forced to conclude that I’d lost the repository, because I had never uploaded the code to the cloud.

Well, the Lambda was still live, and still serving a zip of the JavaScript code. The only problem was that this was a zip of minified GopherJS code. I was able to access this zip and extract the contents of the tooth facts, but I would have to reconstruct the structure of the skill again.

In the meantime, SDKs for Go development of Alexa Skills had been published, so I set about building a native Go Lambda. This took me a surprisingly short amount of time, and I was at feature parity with the old version of my app within just a few hours.
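As a rough picture of what the core of a native Go handler might look like, here is a minimal sketch that routes on the request type and builds a spoken response. It uses only the standard library rather than any particular Alexa SDK; the type names, the `handle` and `speak` functions, and the spoken strings are all assumptions. In a real deployment the handler would be registered with the Lambda runtime, for example through `lambda.Start` from `github.com/aws/aws-lambda-go/lambda`.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Request holds only the fields of an incoming Alexa request used here.
type Request struct {
	Request struct {
		Type   string `json:"type"`
		Intent struct {
			Name string `json:"name"`
		} `json:"intent"`
	} `json:"request"`
}

// OutputSpeech is the text Alexa should read aloud.
type OutputSpeech struct {
	Type string `json:"type"`
	Text string `json:"text"`
}

// ResponseBody carries the speech and whether the session should end.
type ResponseBody struct {
	OutputSpeech     *OutputSpeech `json:"outputSpeech,omitempty"`
	ShouldEndSession bool          `json:"shouldEndSession"`
}

// Response is the envelope returned to Alexa.
type Response struct {
	Version  string       `json:"version"`
	Response ResponseBody `json:"response"`
}

// speak builds a plain-text response.
func speak(text string, end bool) Response {
	return Response{
		Version: "1.0",
		Response: ResponseBody{
			OutputSpeech:     &OutputSpeech{Type: "PlainText", Text: text},
			ShouldEndSession: end,
		},
	}
}

// handle routes a request by its type and, for intents, by intent name.
func handle(req Request) Response {
	switch req.Request.Type {
	case "LaunchRequest":
		return speak("Get your toothbrush ready.", false)
	case "IntentRequest":
		if req.Request.Intent.Name == "AMAZON.StopIntent" {
			return speak("Goodbye.", true)
		}
		return speak("Sorry, I did not understand that.", false)
	default:
		// Other request types: end the session without speaking.
		return Response{Version: "1.0", Response: ResponseBody{ShouldEndSession: true}}
	}
}

func main() {
	var req Request
	if err := json.Unmarshal([]byte(`{"request":{"type":"LaunchRequest"}}`), &req); err != nil {
		fmt.Println("decode error:", err)
		return
	}
	out, _ := json.MarshalIndent(handle(req), "", "  ")
	fmt.Println(string(out))
}
```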

Using AI to polish my app

The next challenge I wanted to solve was to extend my meager set of tooth facts from 20 to around 60. GPT-4 had just been released earlier this week, and so I set about prompting it to generate a set of facts for me. This was a major time-saver, because all I had to do was to review the facts afterwards for correctness. I’m really impressed with the quality of ChatGPT-4’s output because for every fact I was able to find a plausible citation.

I did not need to perform a lot of prompt engineering to get a result I was satisfied with. My prompt was something like:

Please help me expand the following list of facts about teeth, keeping them fun and informative and suitable for a family audience.

// Existing list of facts here

I did need to delete or edit a couple of facts that it generated because I either didn’t trust the citation, or I thought that the fact in question was unclear or uninteresting.

I also used DALL-E 2 to generate a nice new icon for my skill. I had hand-drawn the previous icon in a few minutes, so I wanted to put together something better.

Here is a small set of the iterations I went through on variations of the prompt:

A blue toothbrush with toothpaste. Toothbrush has a smiley face, cartoon outline style, program icon style

Here’s the final icon that I picked. I’ve just submitted a request to change the icon for the skill to Amazon, and it will probably be live by the time you’re reading this article.

This article is getting a little long in the tooth

So there you have it. It took me a little bit of elbow grease to get the Lambda working and deployable again, and a little bit of AI magic to put on a shiny new coat of paint and additional features. My changes have only been live for a few days, but I’m already seeing huge improvements.

The most dramatic improvement I can already see is the much shorter Lambda cold start times that the Go runtime provides me. With that, my P-90 latency has dropped from 6000 ms to around 400 ms. In the coming days, I’ll be paying attention to the number of returning users, to see whether the new content leads to more skill activations.

Anyway, it’s time for bed. I’m off to go brush my teeth.